Content-defined chunking runs approximately as fast as gzip and can achieve compression ratios better than 100:1 on certain classes of data.[1]

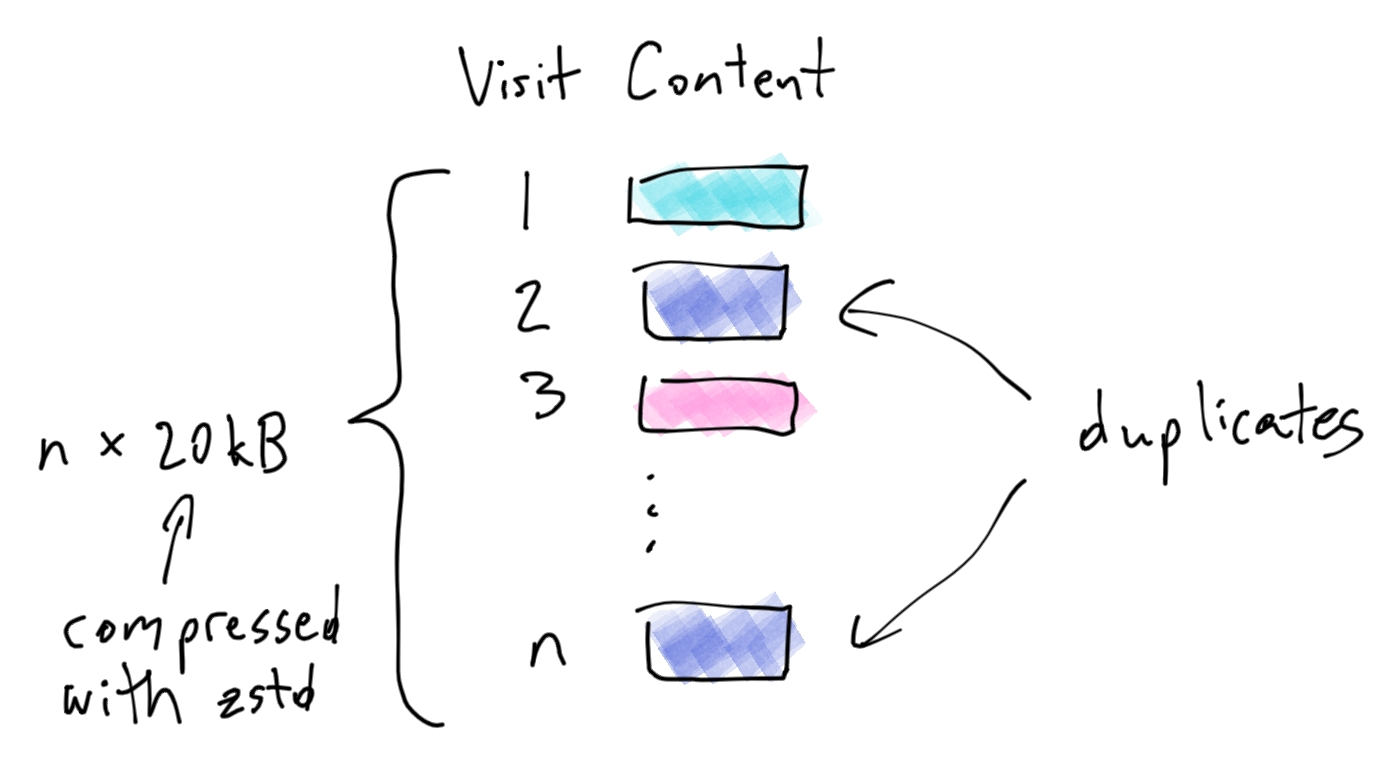

Suppose we have several webpages that are updated frequently, and we want to store every update of every page. The most naive approach is to make a full copy of the page on every visit and compress each copy with a standard algorithm like zstd. For simplicity, let's say that each page is about 100 kB and that the compression ratio of zstd on the typical page is 5:1.[2]
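
As a rough illustration, the naive scheme can be sketched in a few lines of Python. This is only a sketch under stated assumptions: it uses the standard library's `zlib` as a stand-in for zstd, and the function names `store_naively` and `total_size` are made up for this example.

```python
import zlib


def store_naively(snapshots: list[bytes]) -> list[bytes]:
    """Compress every snapshot independently and keep one blob per update.

    zlib is used here only as a stand-in for zstd (an assumption made so
    the sketch runs with the standard library alone).
    """
    return [zlib.compress(page) for page in snapshots]


def total_size(blobs: list[bytes]) -> int:
    """Total number of bytes occupied by the stored blobs."""
    return sum(len(blob) for blob in blobs)


if __name__ == "__main__":
    # Three visits to the same (unchanged) synthetic page.
    page = b"<html>" + b"<p>hello world</p>" * 1000 + b"</html>"
    blobs = store_naively([page, page, page])
    # Every visit costs a full compressed copy, even though nothing changed.
    print(len(blobs), total_size(blobs), 3 * len(blobs[0]))
```

Because each copy is compressed in isolation, the total grows linearly with the number of visits, whether or not the page actually changed.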

At 20 kB per compressed copy (100 kB at 5:1), storing n updates requires n * 20 kB of storage. Can we do better? We notice that occasionally, one of the updates is identical to a previous one. That sounds like an opportunity to improve. Before saving, we hash the content to check for duplication. We only save the content and